How can we design and evaluate user interfaces and interaction paradigms for accessing heterogeneous sets of 2D and 3D data involving uncertainty?

This is the question that Hugo Huurdeman tackles in Virtual Interiors’ Analytical & Experiential Interfaces project stream, in close collaboration with the other project members.

Read more about the results of the work in this blogpost.

Hugo draws on his experience in Human-Computer Interaction and Information Science to design 2D and 3D user interfaces, create working prototypes and evaluate these with actual end users, ranging from scholars to more casual users.

Specifically, he works on a multi-layer interface [1], which currently involves two specific interface layers:

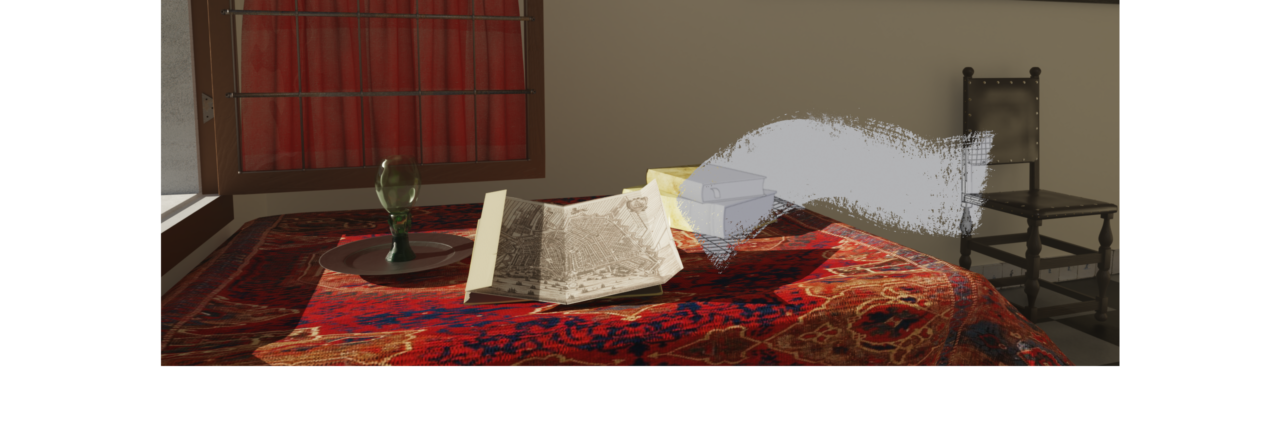

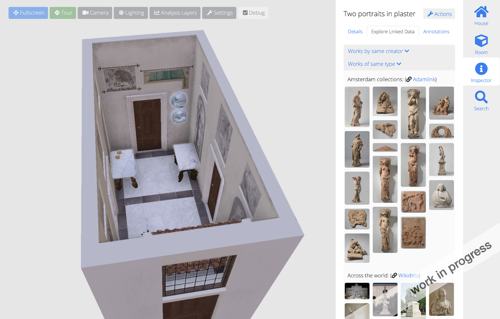

- Analytical interfaces, which allow researchers to dive into underlying research data, view uncertainties, explore associated archive documents and follow leads to connected Linked Data, for instance by examining artist biographies, related artworks and various historical data sources. These analytical interfaces are best experienced on desktop computers or tablets.

- Experiential interfaces, on the other hand, allow users to experience historical spaces intuitively through Virtual Reality, Augmented Reality and the built-in motion sensors of mobile phones. Casual users and researchers can “jump into” a 3D historical space, look around and interact with the objects around them. Via various spatial projections, these users can also examine related Linked Data.

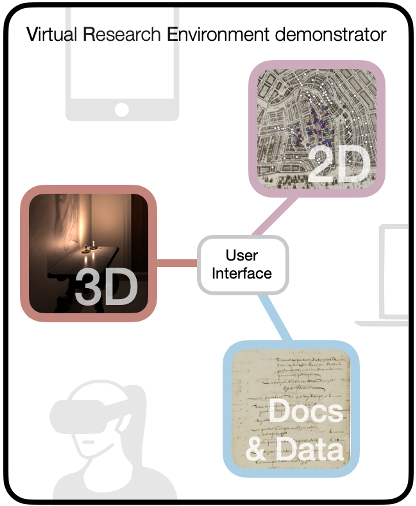

Towards a Virtual Research Environment demonstrator

Integrated approach: Rather than being built as distinct prototypes, these two types of user interface constitute different “lenses” on the same underlying dataset and application.
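The “lenses” idea can be illustrated with a minimal TypeScript sketch. It is not the project's actual code: the record type, its field names (`certainty`, `sources`, `position`), the sample data and both lens functions are hypothetical, chosen only to show how two interface layers might read from one shared dataset instead of keeping separate copies.

```typescript
// Hypothetical record type: the field names are illustrative assumptions,
// not taken from the Virtual Interiors codebase.
interface ReconstructedObject {
  id: string;
  label: string;
  certainty: number;                  // 0 (speculative) to 1 (well documented)
  sources: string[];                  // e.g. identifiers of archive documents
  position: [number, number, number]; // placement in the 3D scene
}

// One shared dataset that both lenses read from (made-up sample values).
const dataset: ReconstructedObject[] = [
  { id: "obj-1", label: "Painting", certainty: 0.9, sources: ["doc-a", "doc-b"], position: [0, 1.5, 2] },
  { id: "obj-2", label: "Cabinet", certainty: 0.4, sources: [], position: [1, 0, 3] },
];

// Analytical lens: surfaces uncertainty and the number of underlying sources.
function analyticalLens(objects: ReconstructedObject[]): string[] {
  return objects.map(
    (o) => `${o.label}: certainty ${o.certainty.toFixed(1)}, ${o.sources.length} source(s)`
  );
}

// Experiential lens: keeps only what is needed to place objects in a 3D space.
function experientialLens(
  objects: ReconstructedObject[]
): { id: string; position: [number, number, number] }[] {
  return objects.map((o) => ({ id: o.id, position: o.position }));
}

console.log(analyticalLens(dataset));
console.log(experientialLens(dataset));
```

Because both functions derive their view from the same array, a correction to a record (for instance a revised certainty value) would show up in either lens without any synchronization step.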

Ultimately, these user interfaces serve as a lynchpin for three modules, which together form Virtual Interiors’ Virtual Research Environment demonstrator:

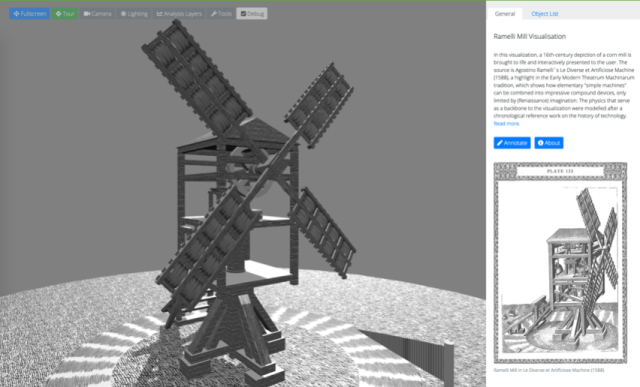

- 3D module: depicts 3D reconstructions and context information (uses BabylonJS)

- 2D maps module: shows historical maps and visualization layers (uses OpenLayers)

- Documents & Data Sources: contextual documents and Linked Data

Read more:

📖 Huurdeman & Piccoli (2021), 3D Reconstructions as Research Hubs (Open Archaeology journal)

📖 Huurdeman, Piccoli & Van Wissen (2021), Linked Data in a 3D Context (DH Benelux 2021)

Research at partner institutions

As part of his embedded research at two partner institutions of the project, Hugo focuses on two sub-projects:

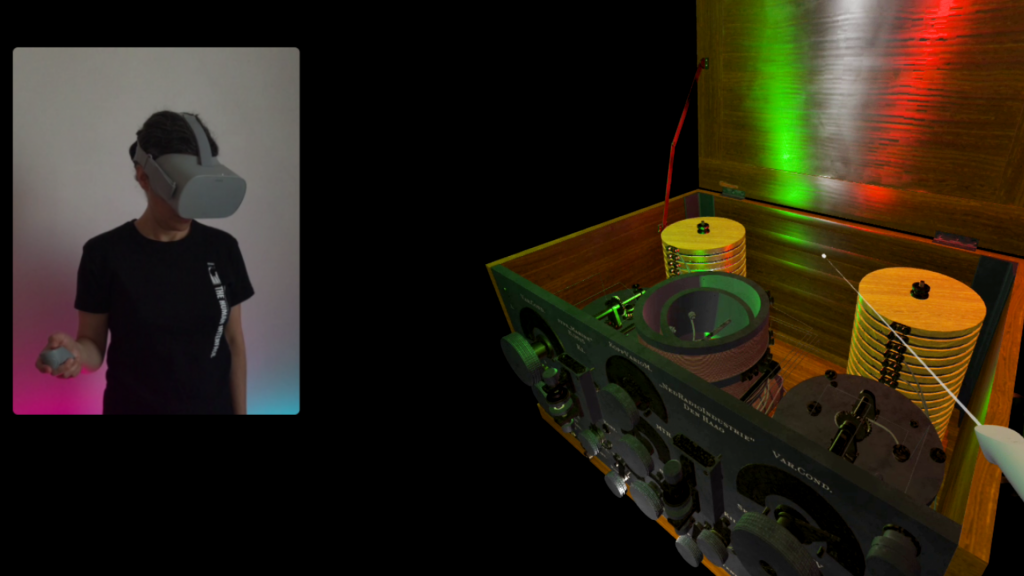

At the Netherlands Institute for Sound and Vision (NISV), Hugo collaborated with project members and historical experts on creating an interactive experience for accessing 3D historical radios in conjunction with contextual information, such as blueprints, notebook entries and photos from NISV’s archive. This prototype can be used on desktop computers, mobile phones and VR headsets.

- 📖 Read a blogpost reporting on this work

- ▶ Try out the experimental interactive radio prototype

- ▶ View slides of a guest lecture (Museums in Context, 2021)

At Brill Publishing, Hugo collaborated with publishing and programming experts to create various building blocks, such as enhanced historical maps. The maps use some of the geospatial data from the Centers of Creativity project. Furthermore, he restored previous 3D models of scientific instruments from the Dynamic Drawings project and experimented with these in Virtual Reality and Augmented Reality settings.

- 🎞 Watch a short video recorded at Brill by Dutch broadcaster RTL Z, in which Etienne Posthumus introduces the digital work at Brill, and Hugo shows the 3D analytical and experiential Virtual Interiors interfaces

Researcher: Hugo Huurdeman. View a list of Hugo’s publications related to the Virtual Interiors project (external site).

- [1] See Shneiderman (2003); Huurdeman & Piccoli (2021), 3D Reconstructions as Research Hubs; Huurdeman & Kamps (2020), Designing Multistage Search Systems to Support the Information Seeking Process